Reflective Design Thinking

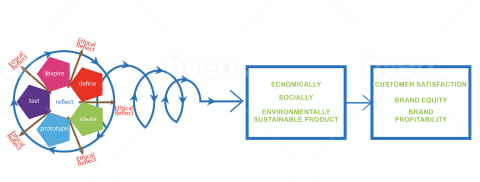

Figure 1: Reflective Design Thinking and its outcomes

The information provided here is based on ongoing research being conducted by Professor Minu Kumar and his research colleagues. It builds on previous work on Design Thinking (DT) by various authors and practitioners but particularly emphasizes the idea of reflective practice (cf. Schön 2017). In line with Donald Schön’s suggestions for “reflection in practice”, the process of Ethical DT hinges on reflection at various key inflection points called “Ethical Reflect” (see Figure 1). The reflection is facilitated with various questions for the team to think through as they proceed with the project.

Disclaimer: Please keep in mind that the contents of this page are meant to be tools to inspire discussion among students and practitioners of design thinking and product development, as opposed to serving any other purpose. If you have feedback on the tools below, please email me (mkumar@sfsu.edu). We welcome your feedback so that we can further improve these tools.

REFLECT: INTEGRATING ETHICAL-REFLECTION INTO THE DESIGN THINKING FRAMEWORK

Design Thinking (DT) has generated significant attention in business practice and research and has been promoted as a methodology well suited to tackle the challenges faced by business organizations in new product design and development and in innovation in general. However, the efficacy of the method, as practiced by professionals in business, has been questioned, given that the process has sometimes been used to develop products that are non-innovative, ethically questionable, or both. For example, the company Juul purportedly used the DT process to develop products that would help users quit smoking. In other words, the product was supposed to function as a smoking cessation product; in reality, however, it is used in other ways, predominantly by groups of users who have never smoked before or are new to smoking. Such examples abound in practice.

The goal of sharing this page is to have developers and firm leadership engage in difficult discussions so that due care is exercised and more novel AND meaningful solutions are allowed to emerge. Liedtka (2015) notes that "while science and design are both hypothesis driven, the design hypothesis differs from the scientific hypothesis, according the process of abduction a key role." Given that design often deals with issues that are paradoxical in nature, as it seeks to find higher-order solutions that accommodate seemingly opposite forces, it often creates novel forms/solutions through abductive reasoning. However, to make the novel solutions meaningful, a considerable amount of reflection needs to happen. Past research (e.g., Seidel and Fixson 2013) has demonstrated that teams that engage in more reflection create more meaningful solutions. We think that the way Design Thinking is being practiced currently (by companies that emphasize "move fast and break things") has relegated the "thinking" component to an afterthought. Furthermore, current innovation practices are resulting in firms that are often criticized for not doing due diligence (e.g., Artificial Intelligence products that have no embedded sentience and sapience) and for privatizing profits and socializing risks (e.g., Purdue Pharma). Therefore, there is a need for design thinkers and product developers to reflect on their decisions during the DT process or new product development (NPD) process so that they can minimize the future risks their products can create for various stakeholders.

On this page we provide teachers and practitioners of DT with several tools that can help them engage in this reflection during their decision-making process.

Step 1: Sensitizing the team about ethics in DT/NPD

The development team is sensitized to the topic of ethics using one or two of the scenarios provided in column three of Table 1. The facilitator can choose one or two of the scenarios and conduct the discussion based on one or two of the ethical approaches provided in column 1.

Table 1: An overview of ethical philosophy considerations in the context of design thinking

| Approaches to ethical thinking | Basic idea | Examples and questions to discuss |
| --- | --- | --- |
| Utilitarian approach | In the utilitarian approach, the aim is to produce the most good, and ethical conduct is the action that will achieve this end. The medical ethics of beneficence (maximize the beneficial effects of a treatment while minimizing its side effects) fits into this approach. | Discuss products that help (functionally, environmentally, or financially) a majority of the people but cause harm to a small group of people. For example, consider a new drug whose clinical studies show that, out of every hundred patients, it relieves chronic excruciating pain in the joints for about 90 patients (with enormous improvement in quality of life and significantly better than the current standard of care), kills four patients, and has no effect on the rest. Would you move forward with its development? Follow-up questions: Does the time for the effect to manifest matter? Does the socio-economic status of the user matter? |
| Rights and responsibilities approach | In the rights approach, the aim is to best protect and respect the moral rights of those affected, and actions that achieve those ends are seen as ethical. Issues of autonomy, auditability, and transparency relating to technology and medical practice relate to this approach. | Discuss the issue of a person being able to choose or not choose a medical treatment regardless of whether it is beneficial to them. For example, should a home aide working with elderly immunocompromised individuals be afforded the choice to be or not to be vaccinated against a deadly new coronavirus? |
| Fairness and justice | The fairness and justice approach follows the principle that all equals should be treated equally, and decisions and actions that lead to such treatment would be considered ethical. | Discuss the scenario where your company is developing a niche and expensive service that allows its target personas (wealthy couples living in a country where the top 1% of households hold 60% of the country's wealth, while the bottom 50% hold 2%) to genetically engineer embryos such that they can have better looking, more athletic, and smarter offspring. Should your company move forward with this? Why or why not? |
| Common good approach | In the common good approach, attention is given to the common conditions that are important to the welfare of everyone, and life in a community is a good in itself. A concern for the environment is often axiomatic to this approach. | Discuss the issue of developing infrastructure products with universal design (such as universal usability, access, and affordability of products) such as libraries, roads, education, and healthcare. For example, should government provide college education universally? Should a non-governmental entity provide universal access to college? |
| Virtue oriented approach | The aim of this approach is to develop one’s character, and ethical conduct (such as doing no harm) would be what a virtuous person would do in the circumstances. | A company developing a product that helps spouses cheat on one another may be deemed immoral and unethical by some who believe in monogamy and marriage but would be permissible for people who do not believe in monogamy and the societal institution of marriage (because they think that humans are not wired to be monogamous). Whose virtue is ethical? |
| Other issues to consider about technology and design | Sentience & sapience: Sentience is the capacity of a system to feel pain and suffering, and sapience is a set of capacities associated with self-awareness and with being a reason‐responsive agent. | Do the stakeholders and the systems/technologies serving the stakeholders have sentience and sapience? If not, do they have moral authority to make decisions affecting stakeholders? For example, consider an automated machine learning tool in Fintech that automatically (without human oversight) determines whom to approve for a home loan from a pool of applicants of different socio-economic statuses. Should such a product be developed and deployed? |

After the discussion of the scenarios, the facilitator can continue by posing the following questions:

- In the context of your project, please explore if the team should adopt any particular ethical approach. Why or why not?

- In the context of your project, are some ethical approaches more important to use than the others? Why or why not?

- In the context of your project, are there critical “inflection” points where you should explore ethics-related questions? Why or why not?

This is done preferably at Inflection Point 1 in Figure 1.

Step 2: Reflection at various inflection points

At each Inflection Point, we recommend that the project manager present Ethical Reflect questions to surface relevant issues. For example, in the Define stage, the team members can be presented with any or some of the questions (based on relevance) posed in the Define section. It needs to be emphasized that this is but one formulation of the set of Reflect questions that can be used. We encourage instructors and practitioners to use the question development framework we provide to develop their own questions that are more contextually relevant to their needs. Please also note that in the tables below we present reflection questions that would normally be encountered during the DT process (column 2) and contrast them with questions that have ethical content (column 4).
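For teams that keep their Reflect questions in a shared script or notebook, the stage-by-approach layout of the tables on this page can be sketched as a small lookup structure. This is only an illustrative sketch, not part of the research described here: the names `ReflectQuestion`, `QUESTION_BANK`, and `questions_for` are hypothetical, and the bank holds just a handful of questions copied from the tables.

```python
from dataclasses import dataclass

# Stage labels follow the tables on this page; the data layout and every
# name in this block are illustrative assumptions, not the authors' tooling.
STAGES = (
    "Inquire and inspire", "Define", "Ideate",
    "Prototype", "Test", "Scaling", "Pivoting",
)


@dataclass(frozen=True)
class ReflectQuestion:
    stage: str      # stage of the DT process (an inflection point)
    approach: str   # ethical approach the question belongs to
    text: str       # the question presented to the team


# A small sample of questions taken from the tables on this page.
QUESTION_BANK = [
    ReflectQuestion(
        "Define", "Common good approach",
        "Did the personas and the problems we chose help us serve a "
        "common good we would like to achieve?",
    ),
    ReflectQuestion(
        "Define", "Gestalt considerations",
        "Did the way we define the problem leave out societal and "
        "environmental considerations? If yes, why?",
    ),
    ReflectQuestion(
        "Prototype", "Utilitarian approach",
        "Did we consider the balance between risks and benefits that "
        "this prototype provides for users?",
    ),
    ReflectQuestion(
        "Pivoting", "Fairness and justice",
        "Will this pivot be exploitative of certain stakeholders?",
    ),
]


def questions_for(stage, approaches=None, bank=QUESTION_BANK):
    """Return question texts for one stage, optionally limited to some approaches."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage!r}")
    return [
        q.text
        for q in bank
        if q.stage == stage and (approaches is None or q.approach in approaches)
    ]


if __name__ == "__main__":
    # Print the questions a facilitator might present at the Define stage.
    for text in questions_for("Define"):
        print("-", text)
```

A facilitator could extend the bank with context-specific questions and call `questions_for` with only the approaches the team agreed on in Step 1.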

Tool 2: Inquire and Inspire Stage

Tools 2 through 9 are intended to help the developers think through the ethical issues that arise during DT/NPD. Please keep in mind that not all of these questions or tools may be applicable to the context of your project. If there is a need for the team to reflect during the inquire and inspire stage, the questions below can aid in the reflection. Please note that in each of the tools (2-9) we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Inquire and inspire: This stage involves getting the designers to gain an empathetic understanding of the problem they are trying to solve, typically through user research, and to develop the inspiration to define the problems and develop solutions. | Why do we need to innovate? What is the context within which we are trying to innovate? Have we talked with enough users? Have we adequately engaged the stakeholders to gain their perspective? What implicit needs did we notice? Why do we think we noticed these particular needs? Have we identified both explicit and implicit needs? If we are using user data to identify needs: (a) do we have the right kinds of data? (b) have we collected and analyzed the data scientifically? | Utilitarian approach | Have we considered all the benefits and harms of talking to the users we have talked to and have not talked to? Are some stakeholders less important than the others? If so, why? |
| | | Rights and responsibilities approach | Have we considered who gets a say on what ought to be designed? Are we ok with leaving some people out of the designing process? What are the rights of the stakeholders we are conducting the research on and what are our responsibilities towards them? |
| | | Fairness and justice | Did we consider if the stakeholders in our process are equals? Are there structural inequities in the context we are drawing our data from? If yes, have we factored in these inequities? |
| | | Common good approach | Did we identify the common needs for everyone in society and the shared environment? Is the sample we used representative of the population we intend to serve? |
| | | Virtue oriented approach | Should we absolutely avoid talking to any group of people/groups/stakeholders? Why or why not? What are our own biases in conducting this research and how do they affect our research process? |
| | | Other technology considerations | If we are using crowdsourced user data/big data sets to identify needs, did we consider the possibility that the data may have systemic biases baked into it? How might we adjust our user research to accommodate these biases? |
| | | Gestalt considerations | Did we consider the environment and society as an important stakeholder at this stage of the process? Do we need to? Why or why not? |

Tool 3: Problem Definition Stage

If there is a need for the team to reflect when they are defining the problem, the questions below can aid in the reflection. Please note that in the table below we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Define: This stage involves defining “wicked problems” arising from contextually relevant user needs to solve through reflection. | Are the user needs/problems well defined? Why do we need to solve that user problem? How and why did we notice this problem? Who are we innovating for? Are the personas well defined? Are the personas representative of the users we want to serve? | Utilitarian approach | Did we consider the benefits and harms of framing the problem the way we have it right now? |
| | | Rights and responsibilities approach | Did we consider our responsibilities when we frame and define the problem as we do, as opposed to other alternative ways? |
| | | Fairness and justice | Did we leave out any major groups who could benefit from this process in our persona development? What are our own biases in defining the personas and their related problem statements? How do we mitigate these biases? |
| | | Common good approach | Did the personas and the problems we chose help us serve a common good we would like to achieve? Is that important to us? Why or why not? |
| | | Virtue oriented approach | Is there anything inherently evil about the way we have framed and defined our problem? Does the problem we are trying to solve meet our standards of importance and virtue? Does it meet the cultural standards of virtue of the context we are designing into? |
| | | Other technology considerations | Do the technology and data sources we used to collect data to define the problem and personas perpetuate structural inequities in society? |
| | | Gestalt considerations | Did the way we define the problem leave out societal and environmental considerations? If yes, why? |

Tool 4: Ideation Stage

If there is a need for the team to reflect during ideation, the questions below can aid in the reflection. Please note that in the table below we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Ideate: Broken into two steps. Ideation: Ideation uses unrestrained creativity and abductive reasoning to solve wicked problems. It usually involves framing the questions in the “how might we…” format such that multiple possible solutions can be explored and reflected on in a focused and nonlinear fashion despite the presence of high ambiguity. We encourage design thinkers not to consider any ethical or environmental consequences at this stage. Idea selection: Ideas are put through the filter of what the team thinks would be valuable to the user and what would be feasible, and a smaller subset of ideas is selected to move forward in the process. | Ideation: Have we challenged the underlying assumptions based on which current solutions are built? Have we created a lot of ideas (quantity breeds quality)? Have we provided enough creative freedom for an acceptable quantity of “wild” ideas to emerge? Idea selection: Have we selected an idea that fits the persona well and solves their problem? Can this idea be prototyped and tested such that the value it provides to the user can be understood and, perhaps, measured? Can it be scaled? | Utilitarian approach | During idea selection: Did we consider the balance between risk and benefit for users? |
| | | Rights and responsibilities approach | During idea selection: Did we violate anyone’s (individuals, groups, classes of people) rights with this solution? Do we have a person responsible for making sure that we don’t violate rights? |
| | | Fairness and justice | During idea selection: Whose ideas were solicited in the course of our design process? What is their stake? Are we ok with not asking certain stakeholders (individuals, groups, classes of people) for their inputs? Did we consider the following questions: Who gets to select the ideas? Who does not? Are we ok with not including certain stakeholders (individuals, groups, classes of people) during idea selection? |
| | | Common good approach | During idea selection: Did we consider if everyone, society, and environment benefits from this solution? Why or why not? Does this solution harm some stakeholders or groups of stakeholders? What unintended consequences might this have for groups of users or society as a whole? Have we considered the ways this product can be misused by users? |
| | | Virtue oriented approach | During idea selection: Is this solution evil? Is this solution a reflection of who we are as a company, our mission and vision? Is this solution a reflection of what we would consider virtuous? |
| | | Other technology considerations | During idea selection: Have we sufficiently considered how our project might impact the environment it is placed in? At the immediate level of use? At the local/network level? At the global level/scale? If the solution/system involves a high degree of automation (e.g., Artificial Intelligence or robotics), will it have the capacity for sentience and sapience? If not, what implications does that have for the solution's ability to make ethical decisions when in operation? |
| | | Gestalt considerations | During idea selection: Did we consider the broader environment and society as an important stakeholder while we ideate solutions and select ideas to move forward? Do we need to? Why or why not? |

Tool 5: Prototyping Stage

If there is a need for the team to reflect when they are developing prototypes, the questions below can aid in the reflection. Please note that in the table below we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Prototype: In this step ideas are given form, and prototypes (resolution of the prototype depends on the design iteration the team is on) are developed. | To what extent does the prototype capture the experience of our solution? Will the prototype actually solve user problems? What questions can the prototype answer? What can it not? Is this prototype free of bugs or does it need further refinement? | Utilitarian approach | Did we consider the balance between risks and benefits that this prototype provides for users? |
| | | Rights and responsibilities approach | Did we violate anyone’s (individuals, groups, classes of people) rights with the development of this prototype? What are our responsibilities while making this prototype? |
| | | Fairness and justice | Did we consider who gets to prototype this product concept? What is their stake? Are we ok with leaving out stakeholders (individuals, groups, classes of people) during prototyping? |
| | | Common good approach | Did the development of this prototype harm anyone who uses it in any way? Does it harm the environment or society in general? |
| | | Virtue oriented approach | Is this prototype evil? Is this prototype a reflection of who we are as a company, our mission and vision? Is this prototype a reflection of what we would consider virtuous? |
| | | Other technology considerations | Have we sufficiently considered how this prototype might impact the environment? At the immediate level of use? At the local/network level? At the global level/scale? If the prototype involves a high degree of usage of automation (e.g., Artificial Intelligence or robotics) will it have the capacity for sentience and sapience? If not, what implications does it have for the solution to resolve ethical dilemmas when deployed and when in operation? |
| | | Gestalt considerations | Have we considered all our societal and environmental responsibilities here? Who gets to make the final decisions on moving forward with this development? How do they benefit from it? If something goes wrong, will they be ok with being held responsible individually or as a team? |

Tool 6: Testing Stage

Testing often brings up issues of ethics. Who should we test with? How do we conduct the test? Double blind tests or single blind? etc. Please note that in the table below we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Test: Testers test the complete product using the best solutions identified in the Prototype phase. The data is analyzed and the prototypes are refined. | Did we use valid and reliable instruments for measurements in our tests? Did we use a representative sample in our tests? Were our tests scientifically designed? Was our data analysis rigorous? Did the tests show that the prototype actually solved the user problem as defined? Did the tests reveal: (a) the most important features of our design? (b) what made our design unique? (c) what the testers felt about our solution? (d) what we would do differently if we could redesign the test? (e) how we might refine the prototype? Is this good enough? | Utilitarian approach | Did we sufficiently test to unearth the benefits and risks that testers incur? Did we adequately consider how we balance risks and benefits for the testers? Did we test long enough to unearth the major risks? |
| | | Rights and responsibilities approach | Did we inform ourselves about all the rights and responsibilities of testers? Did we violate any of their rights? If we violated any rights, what are our responsibilities towards them? Did we adequately consider who is being included in the tests and who is not? Who are we ok with not including (individuals, groups, classes of people)? What biases will that create in our product offering in the marketplace? |
| | | Fairness and justice | Did everyone, society, and environment benefit from this test? Did this test harm some stakeholders or groups of stakeholders more than some others? What unintended consequences might this have for testers or society as a whole? |
| | | Common good approach | Did we sufficiently consider how our project might impact non-users of the product and the environment it is placed in over the long run? At the immediate level of use? At the local/network level? At the global level/scale? |
| | | Virtue oriented approach | Is this testing method evil? Would I sign up for this test myself? Why or why not? Did the test cause harm (intended or unintended)? Was the product used in ways it was not intended to be used? Was the product misused by users in our tests? If yes, how do we create fail-safes for such misuse? Is this type of testing a reflection of who we are? Is this type of testing a reflection of what we would consider virtuous? |
| | | Other technology considerations | If the testing involves a high degree of automation (e.g., Artificial Intelligence or robotics), will it have the capacity for sentience and sapience? If not, what implications does that have for the solution's ability to make ethical decisions when in operation? How might we add sentience and sapience to the prototype? |
| | | Gestalt considerations | Have we sufficiently considered how our project might impact the environment it is placed in over the long run? At the immediate level of use? At the local level? At the global level? |

Tool 7: Scaling Stage

New technology typically helps users increase their human capacities by (a) providing them the ability to accomplish something they were never able to do before, or (b) enabling them to do a task they were able to accomplish before, but more efficiently or effectively. When multiple users are able to use technologies in such a way, it creates a network effect where large groups of people and, oftentimes, the whole society can benefit. However, when technology inherently has intended/unintended consequences that are undesirable, the network effects multiply with scale (and hurt groups of people or society as a whole), and firms need to develop safeguards to prevent the negative effects from scaling. This path is often difficult, inconvenient, and less profitable in the short run (but, we would argue, more profitable in the long run) and may even lead to slower growth, but it is the right thing to do. The questions below will help teams frame “how might we” questions that balance ethics and the need to scale. So, we strongly recommend that all products, regardless of context, consider some or all of the questions below. We also recommend that you develop other context-relevant questions too.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Scaling: Scaling typically involves onboarding a vastly greater number of users and taking on a larger workload that the company serves without compromising performance or losing revenue. | Do we have the technology to scale? Do we have the financial resources to scale? Do we have the right talent to scale? | Gestalt considerations | How do we scale the ethical considerations along with or proportional to the scale we want to achieve? When scaling, what are the positive and negative consequences of network effects on individual users, groups of users, and society as a whole? Do we have appropriate metrics (as we have for number of users, revenues, etc.) to measure how well ethical considerations have scaled? How can our mission (if it is a mission-driven company) be scaled along with or proportional to the scale we want to achieve? |

One exercise we find particularly useful at this stage is having the team ideate the various ways individuals, groups, or governments can misuse the product. This is then followed by another exercise of ideating the ways the product can be harmful to groups of people or to society and the environment in general. Following these exercises, teams tweak the design to try to mitigate or eliminate such negative usages and negative effects. Please see this example of such an exercise involving multiple product development teams. Copy-paste this link: https://www.dropbox.com/s/bl39k0p5tnh67al/Malicious_Use%20workshop.pptx?...

Tool 8: Pivoting

Pivoting is often necessary for products and especially for start-ups. Due diligence should be done when pivoting the product. Therefore, please consider the following questions as you pivot. Facebook and similar platforms that use users/user data as products are a cautionary tale here, both for their shift from a place to create "community" to a broadcasting platform (with none of the regulations applied to other broadcast media applying to them) and for the effect they have had on usurping democracy. Please note that in the table below we make a distinction between regular reflection questions and questions that have ethical implications. Also, note that these are mere examples of questions one could ask and that teams should develop their own questions based on the context of their projects.

| Stage of process | Example of often-used reflection questions in practice | Approaches to ethical reflection | Examples of additional ethical reflection questions to consider at inflection points |
| --- | --- | --- | --- |
| Pivoting: A shift in the business strategy, typically based on feedback given either by the users or experts, to steer the venture into a more profitable or desirable situation. | Why are we pivoting? Is it necessary? Do we have data to support the need to pivot? Has there been a change in the market needs? Has there been a dramatic change in technology? Has there been a dramatic change in the competitive landscape? | Utilitarian approach | Is this pivot primarily to increase scale, revenue, and profits at the expense of other considerations? If so, have we considered all the ethical and environmental considerations we previously incorporated into our solution? Why or why not? Have we considered the unintended consequences of this pivot for all the stakeholders? |
| | | Rights and responsibilities approach | Will this pivot violate the rights of, or exploit, a certain subset of users while giving us access to other or more users? |
| | | Fairness and justice | Will this pivot be exploitative of certain stakeholders? |
| | | Common good approach | Is this pivot creating negative effects for large sections of society and the environment? |
| | | Virtue oriented approach | Has this pivot resulted in taking us further away from our previously held ethical positions? Is this pivot leading away from our core values? |
| | | Other technology considerations | Is this pivot adding scale? If so, have we considered how the ethical problems we previously considered and found problematic will scale with this pivot? |
| | | Gestalt considerations | If there are negative effects on individuals, groups of users, society, or the environment as a result of this pivot, are we ok with how we are making extra revenues based on this pivot? Why or why not? Who will be legally responsible in our company for creating such negative consequences? |

REFERENCES

Liedtka, Jeanne. "Perspective: Linking design thinking with innovation outcomes through cognitive bias reduction." Journal of Product Innovation Management 32.6 (2015): 925-938.

Schön, Donald. The reflective practitioner: How professionals think in action. Routledge, 2017.

Seidel, Victor P., and Sebastian K. Fixson. "Adopting design thinking in novice multidisciplinary teams: The application and limits of design methods and reflexive practices." Journal of Product Innovation Management 30 (2013): 19-33.